Zhipu AI has officially released and open-sourced GLM-4.5V, a next-generation vision-language model (VLM) that significantly advances the state of open multimodal AI. Built on Zhipu's 106-billion-parameter GLM-4.5-Air architecture, a Mixture-of-Experts (MoE) design with 12 billion active parameters, GLM-4.5V delivers strong real-world performance and broad versatility across visual and textual content.
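To make the gap between total and active parameters concrete, here is a minimal sketch of top-k expert routing, the general mechanism behind MoE layers. The eight toy scalar "experts", the router logits, and `top_k=2` are illustrative assumptions, not GLM-4.5-Air's actual configuration.

```python
import math
from typing import Callable, List, Sequence, Tuple


def softmax(xs: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(
    x: float,
    router_logits: Sequence[float],
    experts: Sequence[Callable[[float], float]],
    top_k: int = 2,
) -> Tuple[float, List[int]]:
    """Route x to the top_k highest-scoring experts only.

    Experts outside the top_k are never evaluated, which is why an MoE
    model's active parameter count is far below its total count.
    """
    # Indices of the top_k router scores, best first.
    chosen = sorted(range(len(experts)), key=lambda i: router_logits[i], reverse=True)[:top_k]
    # Renormalize gate weights over the chosen experts only.
    gates = softmax([router_logits[i] for i in chosen])
    out = sum(g * experts[i](x) for g, i in zip(gates, chosen))
    return out, chosen


# Hypothetical toy experts: expert i simply scales its input by (i + 1).
experts = [lambda v, scale=i + 1: scale * v for i in range(8)]
logits = [0.1, 2.0, -1.0, 0.5, 3.0, 0.0, -2.0, 1.0]
y, used = moe_forward(1.0, logits, experts, top_k=2)
print(used, round(y, 4))
```

Only two of the eight experts run for this input. By the same logic, 12B active out of 106B total means roughly 11% of the weights participate in any single forward pass.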

Key features and design innovations

1. Comprehensive visual reasoning

- Image reasoning: GLM-4.5V performs advanced scene understanding, multi-image analysis, and spatial recognition. It can interpret detailed relationships in complex scenes (e.g., distinguishing product defects, analyzing geographic clues, or inferring context from multiple images at once).

- Video understanding: Its 3D convolutional vision encoder lets it process long videos, segment them automatically, and recognize subtle events. This enables applications such as storyboarding, sports analytics, surveillance review, and speech summarization.

- Spatial reasoning: Integrated 3D Rotary Position Embedding (3D-RoPE) gives the model a strong perception of three-dimensional spatial relationships, which is crucial for interpreting visual scenes and grounding visual elements.
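The article does not say how GLM-4.5V divides its attention-head dimensions across axes, so the sketch below assumes equal thirds for time, height, and width, and uses the common RoPE base of 10000 rather than a confirmed GLM setting. It illustrates the property that makes rotary embeddings useful: the attention score between two tokens depends only on their relative (t, h, w) offset.

```python
import math
from typing import List, Sequence


def rope_1d(vec: Sequence[float], pos: int, base: float = 10000.0) -> List[float]:
    """Standard 1D RoPE: rotate each consecutive pair by pos * base^(-i/d)."""
    assert len(vec) % 2 == 0, "RoPE needs an even number of dimensions"
    d = len(vec)
    out = list(vec)
    for i in range(0, d, 2):
        theta = pos * base ** (-i / d)
        c, s = math.cos(theta), math.sin(theta)
        x0, x1 = vec[i], vec[i + 1]
        out[i] = x0 * c - x1 * s
        out[i + 1] = x0 * s + x1 * c
    return out


def rope_3d(vec: Sequence[float], t: int, h: int, w: int) -> List[float]:
    """Hypothetical 3D RoPE: split dims into equal (time, height, width)
    chunks and rotate each chunk by its own axis position."""
    d = len(vec)
    assert d % 6 == 0, "need three chunks of even size"
    k = d // 3
    return rope_1d(vec[:k], t) + rope_1d(vec[k:2 * k], h) + rope_1d(vec[2 * k:], w)


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


q = [0.1 * i for i in range(1, 13)]
k = [0.05 * (12 - i) for i in range(12)]
# Both pairs share the same (t, h, w) offset of (2, 3, 3).
near = dot(rope_3d(q, 3, 5, 7), rope_3d(k, 1, 2, 4))
far = dot(rope_3d(q, 13, 15, 17), rope_3d(k, 11, 12, 14))
print(abs(near - far) < 1e-9)
```

Because each axis gets its own rotation, the model can tell "two frames later" apart from "three patches to the right", which a single flattened position index cannot express directly.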

2. Advanced GUI and agent tasks

- Screen reading and icon recognition: The model excels at reading desktop and app interfaces, localizing buttons and icons, and assisting with automation, which is essential for RPA (Robotic Process Automation) and accessibility tools.

- Desktop operation assistance: Through detailed visual understanding, GLM-4.5V can plan and describe GUI operations, helping users navigate software or carry out complex workflows.
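When the model localizes a button for an RPA or accessibility tool, its coordinates have to be mapped back to screen pixels before anything is clicked. The sketch below assumes the model reports boxes as `(x1, y1, x2, y2)` on a 0-1000 normalized grid; that convention is an assumption here, so check the model card for the actual output format.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def to_pixels(box: Box, width: int, height: int, scale: float = 1000.0) -> Box:
    """Map a box on a hypothetical 0-`scale` normalized grid to pixel coordinates."""
    x1, y1, x2, y2 = box
    return (x1 * width / scale, y1 * height / scale,
            x2 * width / scale, y2 * height / scale)


def click_point(box: Box, width: int, height: int, scale: float = 1000.0) -> Tuple[int, int]:
    """Return the integer pixel at the center of the box, e.g. for an RPA click."""
    px1, py1, px2, py2 = to_pixels(box, width, height, scale)
    return round((px1 + px2) / 2), round((py1 + py2) / 2)


# A button the model reported at (100, 200, 300, 260) on a 1920x1080 screen.
print(click_point((100, 200, 300, 260), 1920, 1080))
```

Clicking the box center rather than a corner gives the most tolerance for small localization errors.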

3. Complex chart and document analysis

- Chart understanding: GLM-4.5V can analyze charts, infographics, and scientific diagrams in PDFs or PowerPoint files, and can even extract summarized conclusions and structured data from dense, long documents.

- Long document interpretation: With support for up to 64,000 tokens of multimodal context, it parses and summarizes extended, image-rich documents (such as research papers, contracts, or compliance reports), making it well suited to business intelligence and knowledge extraction.
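Even a 64,000-token window has limits. The sketch below greedily groups consecutive pages into requests that fit the budget while keeping a reserve for the prompt and the answer. The per-page token costs and the 2,000-token reserve are hypothetical; real costs depend on image resolution and the tokenizer.

```python
from typing import List, Sequence

CONTEXT_LIMIT = 64_000  # context length cited in the article


def pack_pages(page_tokens: Sequence[int], limit: int = CONTEXT_LIMIT,
               reserve: int = 2_000) -> List[List[int]]:
    """Greedily group consecutive pages into requests that fit the window.

    `reserve` keeps room for the prompt and the model's answer (illustrative).
    Returns lists of page indices, preserving page order.
    """
    budget = limit - reserve
    chunks: List[List[int]] = []
    current: List[int] = []
    used = 0
    for idx, cost in enumerate(page_tokens):
        if cost > budget:
            raise ValueError(f"page {idx} alone needs {cost} tokens (> {budget})")
        # Start a new request when this page would overflow the current one.
        if current and used + cost > budget:
            chunks.append(current)
            current, used = [], 0
        current.append(idx)
        used += cost
    if current:
        chunks.append(current)
    return chunks


# Hypothetical token costs for five image-heavy pages.
print(pack_pages([20_000, 25_000, 15_000, 30_000, 10_000]))
```

Keeping pages in order matters for summarization, since the model sees each section in its original context rather than shuffled to fill gaps.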

4. Grounding and visual positioning

- Precise grounding: The model can accurately localize and describe visual elements such as objects, bounding boxes, or specific UI elements, drawing on world knowledge and semantic context rather than pixel-level cues alone. This enables detailed analysis for quality control, AR applications, and image annotation workflows.
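Grounding output is easiest to trust when it can be checked. In quality-control or annotation workflows, a common approach is to compare a predicted box against a reference box using intersection-over-union (IoU). The sketch assumes both boxes are already in the same pixel coordinates; the 0.5 acceptance threshold is a widespread convention, not a GLM-4.5V setting.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) with x2 > x1, y2 > y1


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def accept(pred: Box, ref: Box, threshold: float = 0.5) -> bool:
    """Accept a predicted box if it overlaps the reference enough."""
    return iou(pred, ref) >= threshold


print(iou((0, 0, 10, 10), (5, 5, 15, 15)))
print(accept((10, 10, 50, 50), (12, 12, 50, 50)))
```

Boxes that fail the threshold can be routed to a human reviewer instead of being discarded outright.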

Architecture highlights

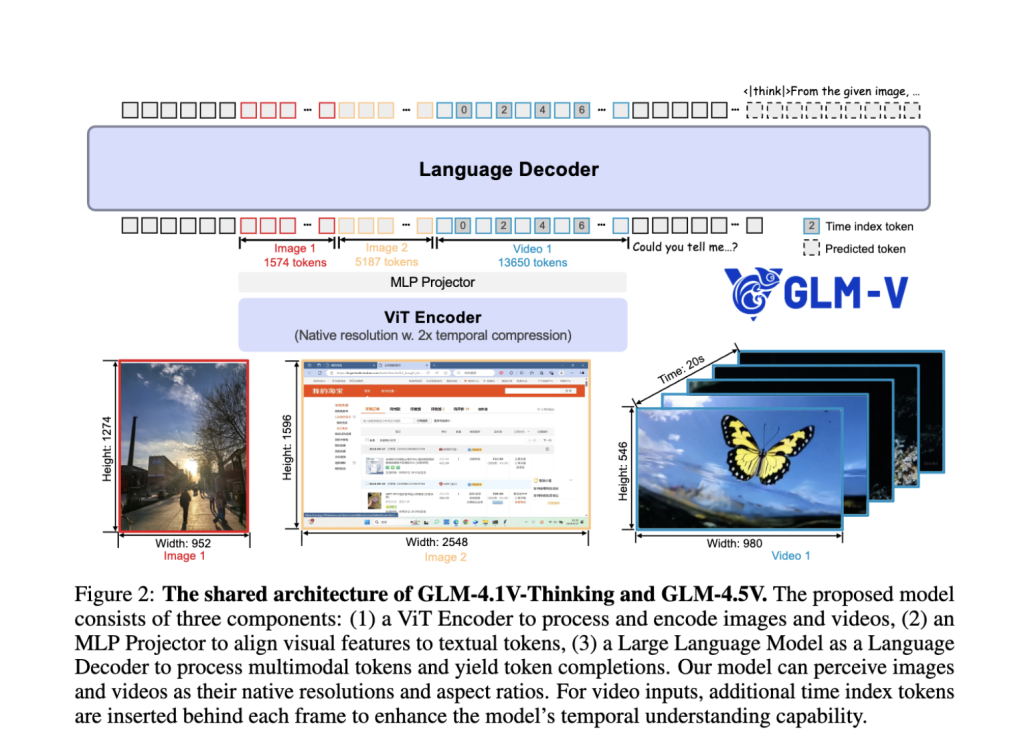

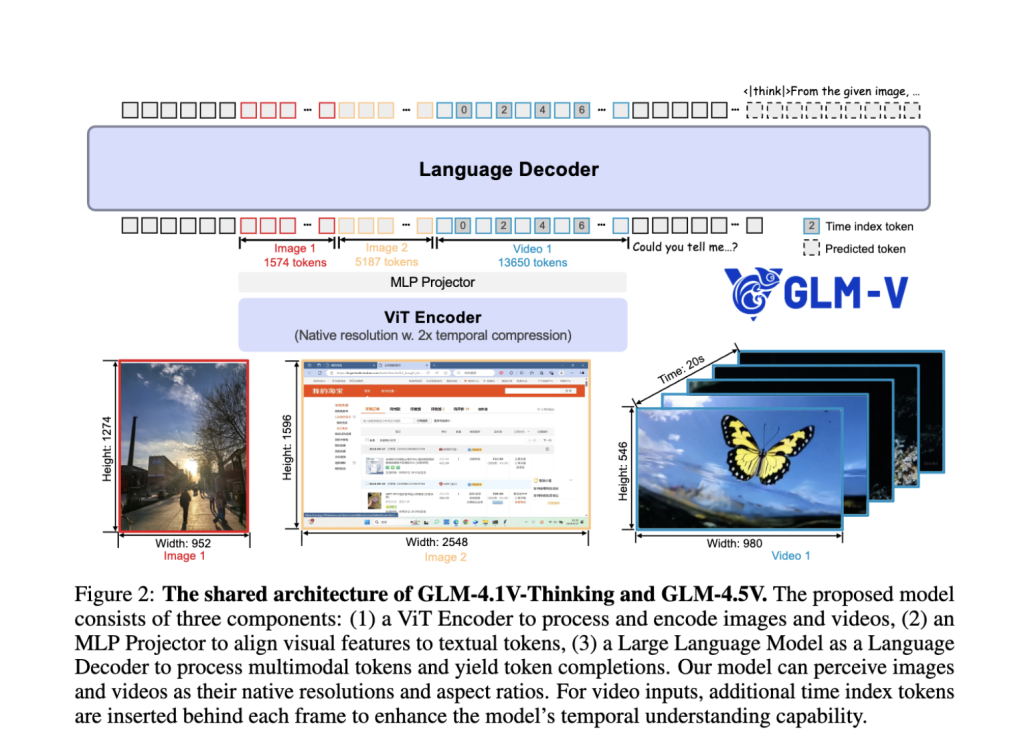

- Hybrid vision-language pipeline: The system combines a powerful vision encoder, an MLP adapter, and a language decoder, allowing visual and textual information to be fused seamlessly. Static images, videos, GUIs, charts, and documents are all treated as first-class inputs.

- Mixture-of-Experts (MoE) efficiency: Although the model has 106B total parameters, the MoE design activates only 12B per inference, ensuring high throughput and affordable deployment without sacrificing accuracy.

- 3D convolution for video and images: Video input is processed with temporal downsampling and 3D convolution, so high-resolution video at native aspect ratios can be analyzed while maintaining efficiency.

- Adaptive context length: Support for up to 64K tokens lets the model robustly handle multi-image prompts, concatenated documents, and lengthy dialogues in a single pass.

- Innovative pretraining and RL: The training regime combines large-scale multimodal pretraining, supervised fine-tuning, and Reinforcement Learning with Curriculum Sampling (RLCS) to build proficiency in long-chain reasoning and real-world tasks.
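The article names the ingredients of the video path (temporal downsampling plus 3D convolution) but not the numbers. The sketch below estimates how many visual tokens a clip produces under assumed settings: 14-pixel patches, a temporal stride of 2, and a 2x2 spatial merge before the decoder. These values are typical of recent open VLMs but are not confirmed here for GLM-4.5V, and frames are assumed to be padded up to whole merged patches.

```python
import math


def video_tokens(frames: int, height: int, width: int,
                 patch: int = 14, temporal_stride: int = 2,
                 spatial_merge: int = 2) -> int:
    """Estimate visual tokens for a clip under assumed encoder settings.

    A 3D-conv patch embedding with kernel (temporal_stride, patch, patch)
    folds each group of `temporal_stride` frames into one time slice; a
    spatial_merge x spatial_merge merge then shrinks the spatial grid.
    """
    t = math.ceil(frames / temporal_stride)
    cell = patch * spatial_merge  # pixels covered by one merged token per side
    h = math.ceil(height / cell)
    w = math.ceil(width / cell)
    return t * h * w


# 64 frames at 448x448, with and without temporal downsampling.
print(video_tokens(64, 448, 448))
print(video_tokens(64, 448, 448, temporal_stride=1))
```

Under these assumptions, a temporal stride of 2 halves the token count for the same clip, the kind of saving that helps long videos fit inside a 64K-token window.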

“Thinking Mode” with Adjustable Reasoning Depth

A prominent feature is the “thinking mode” switch:

- Thinking mode on: Prioritizes deep, step-by-step reasoning, suited to complex tasks (e.g., logical deduction, multi-step chart or document analysis).

- Thinking mode off: Delivers faster, direct answers for routine lookups or simple Q&A. Users can control the model's reasoning depth at inference time, balancing speed against interpretability and rigor.
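How the toggle is exposed depends on the serving stack. The sketch below builds an OpenAI-style chat payload whose `thinking` field follows the `{"type": "enabled" | "disabled"}` shape used by Zhipu's hosted API, and splits a `<think>...</think>` reasoning trace from the final answer. The model name `glm-4.5v`, the field shape, and the tag format are assumptions to verify against the official documentation; no network call is made.

```python
import re
from typing import Any, Dict, Tuple


def build_request(prompt: str, image_url: str, thinking: bool) -> Dict[str, Any]:
    """Build a chat payload with an assumed thinking toggle (not sent anywhere)."""
    return {
        "model": "glm-4.5v",  # assumed model identifier
        "messages": [{
            "role": "user",
            "content": [
                {"type": "image_url", "image_url": {"url": image_url}},
                {"type": "text", "text": prompt},
            ],
        }],
        # Assumed field shape; self-hosted servers may expose this differently.
        "thinking": {"type": "enabled" if thinking else "disabled"},
    }


THINK_RE = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(text: str) -> Tuple[str, str]:
    """Separate an assumed <think>...</think> trace from the final answer."""
    m = THINK_RE.search(text)
    if not m:
        return "", text.strip()
    reasoning = m.group(1).strip()
    answer = (text[:m.start()] + text[m.end():]).strip()
    return reasoning, answer


req = build_request("Summarize the chart.", "https://example.com/chart.png", thinking=False)
print(req["thinking"])
print(split_reasoning("<think>Bars rise each year.</think>Revenue grows."))
```

Keeping the reasoning trace separate lets an application log it for auditing while showing users only the answer.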

Benchmark performance and real-world impact

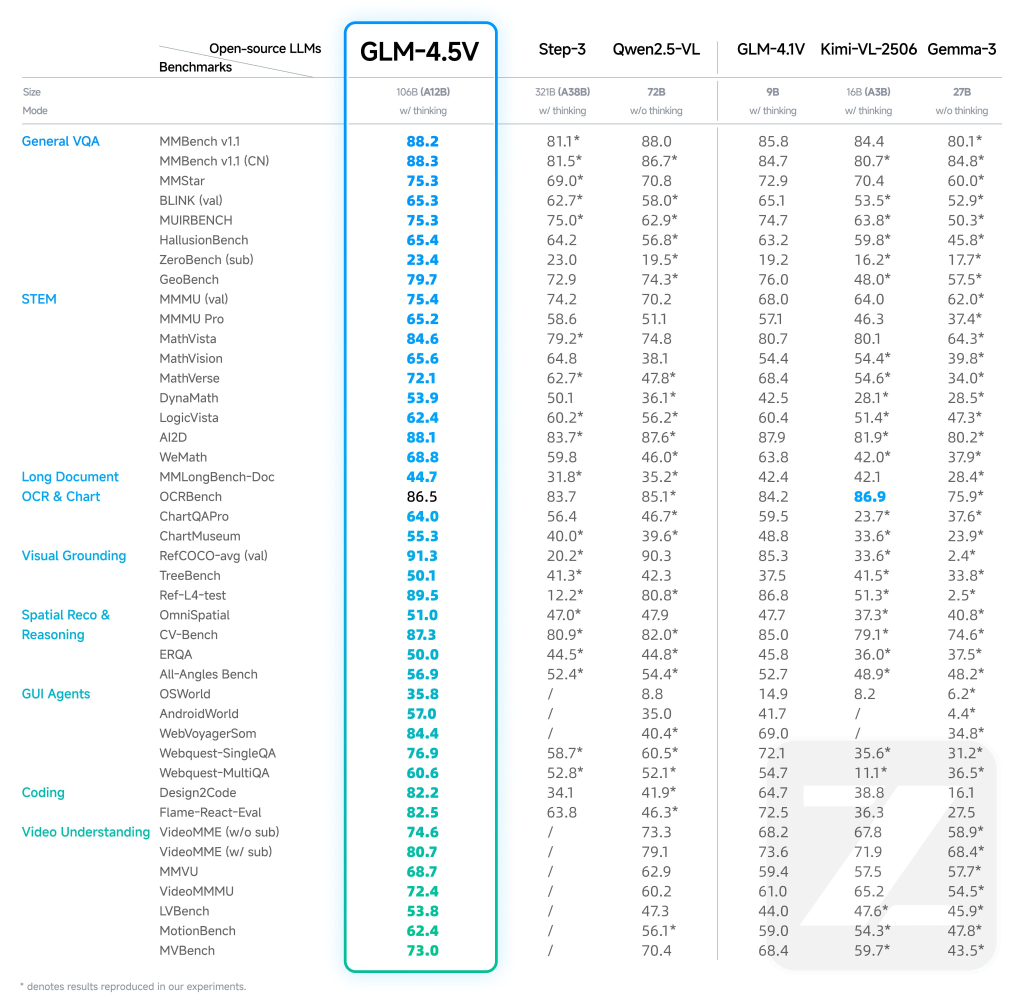

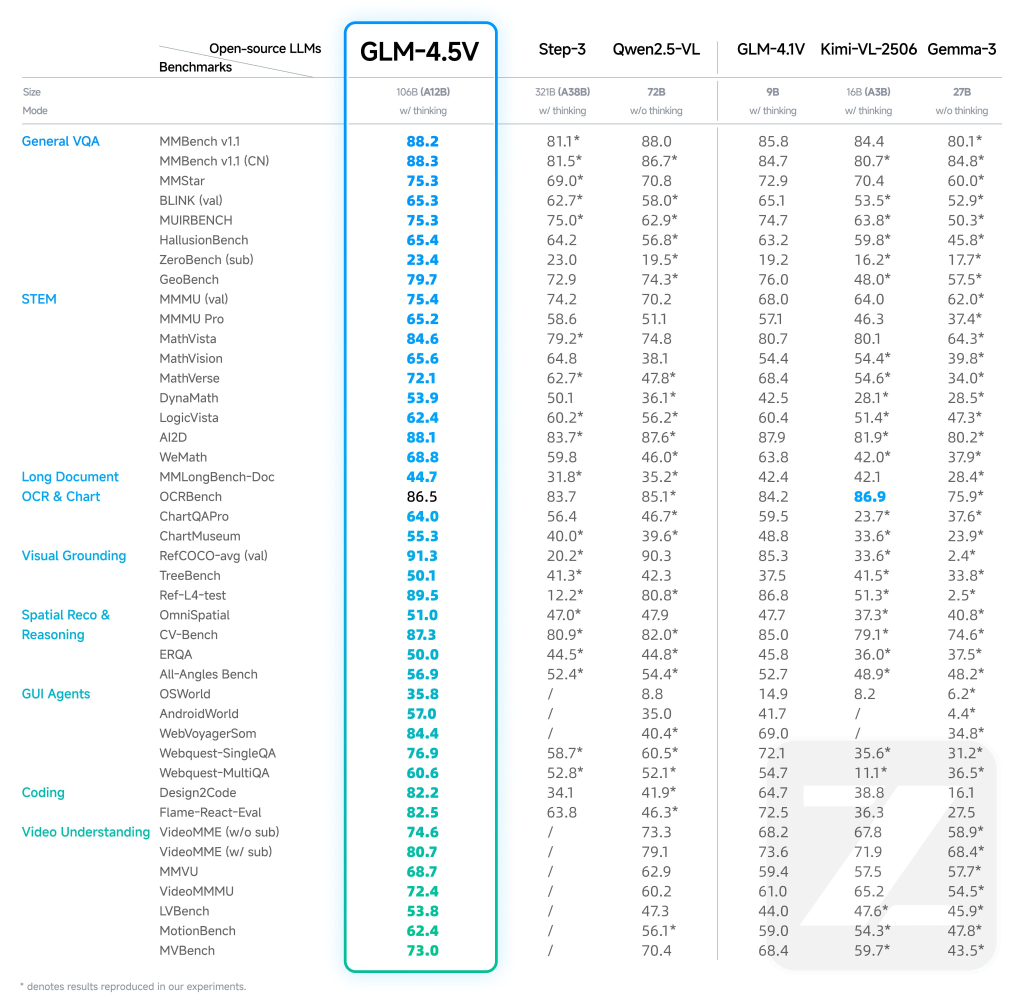

- State-of-the-art results: GLM-4.5V achieves SOTA on 41-42 public multimodal benchmarks, including MMBench, AI2D, MMStar, and MathVista, surpassing open models and some premium proprietary models in categories such as STEM QA, chart understanding, GUI operation, and video understanding.

- Real-world deployment: Enterprises and researchers report transformative results using GLM-4.5V for defect detection, automated report analysis, digital assistant creation, and accessibility technology.

- Democratizing multimodal AI: Released under the MIT license, the model gives everyone access to cutting-edge multimodal reasoning that was previously gated behind proprietary APIs.

Example Use Cases

| Feature | Example Uses | Description |
|---|---|---|
| Image Reasoning | Defect detection, content moderation | Scene understanding, multi-image summarization |
| Video Analysis | Surveillance, content creation | Long-video segmentation, event recognition |
| GUI Tasks | Accessibility, automation, quality inspection | Screen/UI reading, icon localization, action suggestions |
| Chart Analysis | Finance, research reports | Visual analytics, data extraction from complex charts |
| Document Parsing | Law, insurance, science | Analyzing and summarizing long, illustrated documents |
| Grounding | AR, retail, robotics | Target object localization, spatial referencing |

Summary

Zhipu AI's GLM-4.5V is a flagship open-source vision-language model that sets new performance and usability standards for multimodal reasoning. With its powerful architecture, long context length, switchable “Thinking Mode,” and broad range of capabilities, GLM-4.5V is redefining what is possible for enterprises, researchers, and developers working at the intersection of vision and language.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of Marktechpost, an artificial intelligence media platform known for in-depth coverage of machine learning and deep learning news that is both technically sound and easily understood by a wide audience. The platform draws over 2 million monthly views, demonstrating its popularity among readers.