Meet SmallThinker: A Family of Efficient Large Language Models Natively Trained for Local Deployment

The generative AI landscape is dominated by massive language models, often designed for the vast capacities of cloud data centers. While powerful, these models make it difficult or impossible for everyday users to deploy advanced AI privately and efficiently on local devices such as laptops, smartphones, or embedded systems. Compressing cloud-scale models for the edge often leads to substantial compromises, so the team behind SmallThinker asked a more fundamental question: What if a language model were built for local constraints from the beginning?

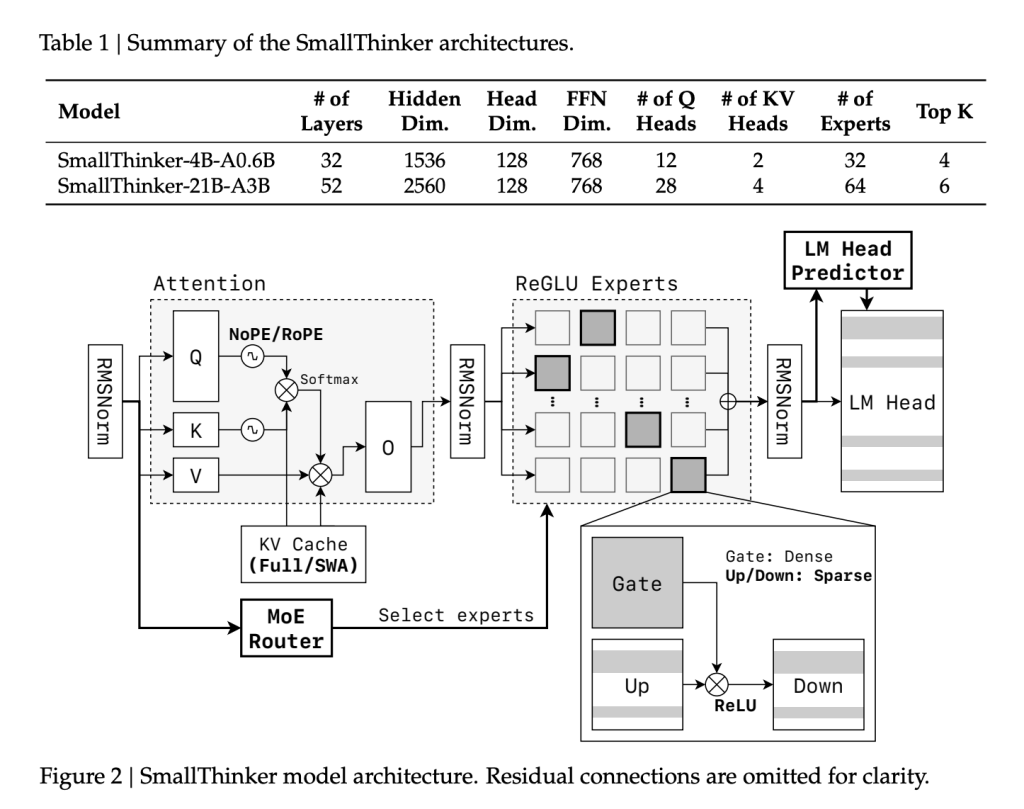

This was the genesis of SmallThinker, a family of Mixture-of-Experts (MoE) models developed by researchers at Shanghai Jiao Tong University and Zenergize AI, which targets high performance on memory-limited and compute-constrained devices. With two main variants, SmallThinker-4B-A0.6B and SmallThinker-21B-A3B, they set new benchmarks for efficient, accessible AI.

Local constraints become design principles

Architectural Innovation

Fine-Grained Mixture-of-Experts (MoE):

Unlike typical monolithic LLMs, SmallThinker's backbone has a fine-grained MoE design. Multiple specialized expert networks are trained, but only a small subset is activated for each input token:

- SmallThinker-4B-A0.6B: 4 billion parameters in total, with only 600 million active per token.

- SmallThinker-21B-A3B: 21 billion parameters in total, of which only 3 billion are active per token.

This provides high capacity without the memory and computation penalties of a dense model of the same size.
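
To make sparse activation concrete, here is a minimal sketch of top-k expert routing in plain Python. The expert count, the value of `k`, and the toy scalar experts are illustrative assumptions, not SmallThinker's actual configuration, and the names `route_top_k` and `moe_forward` are hypothetical.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_top_k(router_logits, k):
    """Pick the k highest-scoring experts and normalize their weights."""
    ranked = sorted(range(len(router_logits)),
                    key=lambda i: router_logits[i], reverse=True)
    chosen = ranked[:k]
    weights = softmax([router_logits[i] for i in chosen])
    return list(zip(chosen, weights))

def moe_forward(x, experts, router_logits, k):
    """Run only the selected experts and mix their outputs."""
    return sum(w * experts[i](x) for i, w in route_top_k(router_logits, k))

# Toy setup (assumed): four scalar "experts", two active per token.
experts = [lambda x: x + 1, lambda x: 2 * x, lambda x: -x, lambda x: x * x]
router_logits = [0.1, 2.0, -1.0, 1.5]
selected = route_top_k(router_logits, k=2)
output = moe_forward(3.0, experts, router_logits, k=2)

# Active-parameter fractions implied by the figures quoted in the article.
active_fraction_4b = 0.6 / 4    # 600M of 4B, i.e. 15%
active_fraction_21b = 3 / 21    # 3B of 21B, roughly 14%
```

In this toy run only experts 1 and 3 are evaluated; the other two are never touched, which is where the compute saving comes from.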

ReGLU-Based Feed-Forward Sparsity:

Activation sparsity is further enforced using ReGLU. Even within activated experts, more than 60% of neurons are idle in each inference step, enabling substantial compute and memory savings.
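
As a rough illustration of why a ReGLU feed-forward block is sparse, the sketch below computes `W_down · (ReLU(W_gate · x) ⊙ (W_up · x))` in plain Python and skips every neuron whose gate is zero. The tiny weight matrices and the function name `reglu_ffn` are assumptions made up for this example; they are not taken from the SmallThinker code.

```python
def matvec(matrix, vector):
    """Multiply a list-of-rows matrix by a vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]

def reglu_ffn(x, w_gate, w_up, w_down):
    """ReGLU feed-forward block that only touches neurons with a nonzero gate."""
    gate = [max(0.0, g) for g in matvec(w_gate, x)]   # ReLU zeroes many neurons
    up = matvec(w_up, x)
    hidden = [g * u for g, u in zip(gate, up)]
    active = [j for j, g in enumerate(gate) if g > 0.0]
    # Idle neurons contribute nothing, so their down-projection columns are skipped.
    out = [sum(row[j] * hidden[j] for j in active) for row in w_down]
    sparsity = 1.0 - len(active) / len(gate)
    return out, sparsity

# Toy weights (assumed): 2 inputs, 4 hidden neurons, 2 outputs.
w_gate = [[1, 0], [-1, 0], [0, -1], [0, 1]]
w_up = [[1, 1], [1, 1], [1, 1], [1, 1]]
w_down = [[1, 1, 1, 1], [1, 0, 0, -1]]

ffn_out, ffn_sparsity = reglu_ffn([1.0, 2.0], w_gate, w_up, w_down)
```

Here two of the four hidden neurons have a zero gate, so half of the down-projection work is skipped. The article reports that the real models exceed 60% idle neurons.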

NoPE-RoPE Hybrid Attention:

For efficient context handling, SmallThinker adopts a novel attention pattern: alternating between global No Positional Embedding (NoPE) layers and local RoPE sliding-window layers. This approach supports long context lengths (up to 32K tokens for the 4B model and 16K for the 21B model) while trimming the key/value cache size compared to traditional all-global attention.
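
The cache saving can be estimated with simple arithmetic: a global layer stores keys and values for every token in the context, while a sliding-window layer stores at most one window's worth. The sketch below counts cached token positions per sequence. The layer count, the one-global-layer-in-four ratio, and the 4,096-token window are assumptions chosen for illustration, not figures from the article.

```python
def kv_cache_positions(num_layers, context_len, window, global_every):
    """Count cached token positions summed over all layers.

    Layers whose index is a multiple of `global_every` are treated as global;
    the rest are treated as sliding-window layers.
    """
    total = 0
    for layer in range(num_layers):
        if layer % global_every == 0:
            total += context_len                 # global layer: full context
        else:
            total += min(context_len, window)    # local layer: one window
    return total

# Assumed configuration for illustration only.
hybrid = kv_cache_positions(num_layers=8, context_len=16384,
                            window=4096, global_every=4)
all_global = kv_cache_positions(num_layers=8, context_len=16384,
                                window=4096, global_every=1)
cache_ratio = hybrid / all_global
```

Under these assumed settings the hybrid layout caches 57,344 positions instead of 131,072, a ratio of about 0.44. The saving grows as the context gets longer relative to the window.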

Pre-Attention Router and Intelligent Offloading:

For on-device use, it is crucial to decouple inference speed from slow storage. SmallThinker's "pre-attention router" predicts which experts will be needed before each attention step, so their parameters are prefetched from SSD/flash in parallel with computation. The system keeps "hot" experts cached in RAM (using an LRU policy), while less-used experts stay on fast storage. This design largely hides I/O latency and maximizes throughput even with minimal system memory.
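
A minimal sketch of the caching side of this design is shown below: an LRU cache of expert weights with a `prefetch` hook that a router prediction could drive. The class name `ExpertCache`, the `load_from_storage` callback, and the capacity of two are all hypothetical, and the prefetch here runs synchronously, whereas the article describes it overlapping with attention computation.

```python
from collections import OrderedDict

class ExpertCache:
    """LRU cache of expert weights; assumes capacity >= 1."""

    def __init__(self, capacity, load_from_storage):
        self.capacity = capacity
        self.load_from_storage = load_from_storage
        self.cache = OrderedDict()   # least recently used entry comes first
        self.hits = 0
        self.misses = 0

    def _admit(self, expert_id):
        """Load an expert and evict the least recently used one if over capacity."""
        self.cache[expert_id] = self.load_from_storage(expert_id)
        if len(self.cache) > self.capacity:
            self.cache.popitem(last=False)

    def prefetch(self, predicted_ids):
        """Warm the cache with experts the router expects to need."""
        for expert_id in predicted_ids:
            if expert_id in self.cache:
                self.cache.move_to_end(expert_id)
            else:
                self._admit(expert_id)

    def get(self, expert_id):
        """Return expert weights, loading them on a miss."""
        if expert_id in self.cache:
            self.hits += 1
            self.cache.move_to_end(expert_id)
        else:
            self.misses += 1
            self._admit(expert_id)
        return self.cache[expert_id]

loads = []

def load_from_storage(expert_id):
    """Stand-in for a slow SSD/flash read (hypothetical)."""
    loads.append(expert_id)
    return f"expert-{expert_id}-weights"

cache = ExpertCache(capacity=2, load_from_storage=load_from_storage)
cache.prefetch([0, 1])   # router predicts experts 0 and 1
cache.get(0)             # hit: already prefetched
cache.get(2)             # miss: loads expert 2 and evicts expert 1
resident = list(cache.cache)
```

After this sequence the cache holds experts 0 and 2, with one hit and one miss recorded. A more accurate router prediction turns more of the misses into prefetches.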

Training regime and data procedures

The SmallThinker models were trained from scratch rather than distilled, on a curriculum that progresses from general knowledge to highly specialized STEM, math, and code data:

- The 4B variant processed 2.5 trillion tokens; the 21B model saw 7.2 trillion.

- Data comes from a blend of curated open-source collections, augmented synthetic math and code datasets, and supervised instruction-following corpora.

- Methodology includes quality filtering, MGA-style data synthesis, and persona-driven prompting strategies, especially to improve performance in reasoning-heavy domains.

Benchmark results

On academic tasks:

Although it activates far fewer parameters than comparable competitors, SmallThinker-21B-A3B matches or beats them across mathematics (MATH-500), graduate-level science questions (GPQA-Diamond), code generation (HumanEval), and broad knowledge evaluation (MMLU):

| Model | MMLU | GPQA-Diamond | MATH-500 | IFEval | LiveBench | HumanEval | Average |
|---|---|---|---|---|---|---|---|
| SmallThinker-21B-A3B | 84.4 | 55.1 | 82.4 | 85.8 | 60.3 | 89.6 | 76.3 |
| Qwen3-30B-A3B | 85.1 | 44.4 | 84.4 | 84.3 | 58.8 | 90.2 | 74.5 |
| Phi-4-14B | 84.6 | 55.5 | 80.2 | 63.2 | 42.4 | 87.2 | 68.8 |
| Gemma3-12B-it | 78.5 | 34.9 | 82.4 | 74.7 | 44.5 | 82.9 | 66.3 |

The 4B-A0.6B model also outperforms or matches other models with similar active parameter counts, and is especially strong in reasoning and code.

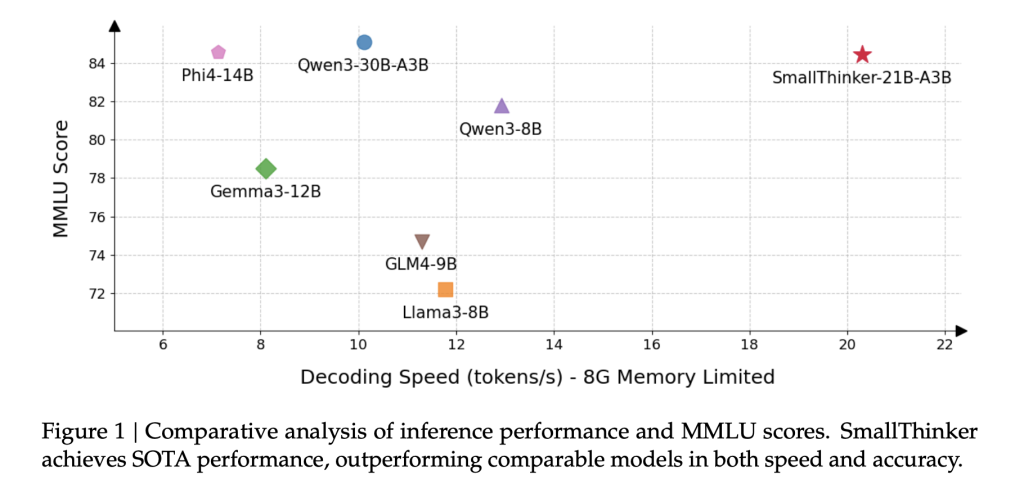

On real hardware:

Where SmallThinker truly shines is on memory-starved devices:

- The 4B model runs comfortably with just 1 GiB of RAM, and the 21B model with only 8 GiB, without a catastrophic drop in speed.

- Prefetching and caching mean that even under these limits, inference remains much faster and smoother than with baseline models that simply swap to disk.

For example, the 21B-A3B variant maintains over 20 tokens per second on a standard CPU, while Qwen3-30B-A3B nearly crashes under similar memory constraints.

The impact of sparsity and specialization

Expert Specialization:

Activation logs show that 70-80% of experts are sparsely used, while a small core of "hotspot" experts light up for specific domains or languages, a property that enables highly predictable, efficient caching.
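
One simple way to turn such activation logs into a caching decision is to rank experts by how often they fire and keep the smallest set that covers most activations. The sketch below does this with `collections.Counter`. The log contents, the 80% coverage target, and the function name `hot_experts` are assumptions for illustration, not the authors' procedure.

```python
from collections import Counter

def hot_experts(activation_log, coverage=0.8):
    """Return the most frequently used experts that together reach `coverage`."""
    counts = Counter(activation_log)
    total = sum(counts.values())
    hot, covered = [], 0
    for expert_id, hits in counts.most_common():
        if covered / total >= coverage:
            break
        hot.append(expert_id)
        covered += hits
    return hot

# Assumed log: expert IDs recorded once per routed token.
activation_log = [0] * 50 + [1] * 30 + [2] * 10 + [3] * 5 + [4] * 5
pinned = hot_experts(activation_log, coverage=0.8)
```

With this skewed toy log, two of the five experts account for 80% of activations, so pinning just those two in RAM would serve most tokens without touching storage.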

Neuron-Level Sparsity:

Even within active experts, the median neuron inactivity rate exceeds 60%. Early layers are almost entirely sparse, while deeper layers retain this efficiency, which illustrates why SmallThinker manages to do so much with so little compute.

System limitations and future work

Although the achievements are substantial, SmallThinker is not without caveats:

- Training Set Size: Its pretraining corpus, while massive, is still smaller than those behind some frontier cloud models, which may limit generalization in rare or obscure domains.

- Model Alignment: Only supervised fine-tuning is applied; unlike leading cloud LLMs, no reinforcement learning from human feedback is used, which may leave some safety and helpfulness gaps.

- Language Coverage: English and Chinese, along with STEM content, dominate the training data; quality may degrade in other languages.

The authors expect to expand the datasets and introduce RLHF pipelines in future releases.

Conclusion

SmallThinker represents a fundamental departure from the "shrink cloud models for the edge" tradition. By starting from local-first constraints, it delivers high capability, high speed, and low memory use through architectural and systems innovation. This opens the door to private, responsive, and capable AI on almost any device, democratizing advanced language technology for a much wider range of users and use cases.

The models, SmallThinker-4B-A0.6B-Instruct and SmallThinker-21B-A3B-Instruct, are freely available to researchers and developers, and they show what is possible when model design is driven by deployment realities, not just data-center ambition.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of Marktechpost, an artificial intelligence media platform known for in-depth coverage of machine learning and deep learning news that is both technically sound and understandable to a wide audience. The platform has over 2 million views per month, demonstrating its popularity among its audience.