NVIDIA AI Development Team Releases Llama Nemotron Super v1.5: Setting New Standards in Reasoning and Agentic AI

The AI landscape continues to evolve rapidly, with breakthroughs that push the boundaries of what models can achieve in reasoning, efficiency, and application versatility. NVIDIA's latest release, Llama Nemotron Super v1.5, is a significant leap forward in both performance and usability, especially for agentic and reasoning-intensive tasks. This article explores the technical advances and practical implications of Llama Nemotron Super v1.5, which is designed to equip developers and enterprises with cutting-edge AI capabilities.

Overview: Llama Nemotron Super v1.5 in Context

NVIDIA's Nemotron family is known for taking the strongest open-source large language models and enhancing them for improved accuracy, efficiency, and transparency. Llama Nemotron Super v1.5 is the latest and most advanced iteration, explicitly designed for high-stakes reasoning scenarios such as math, science, code generation, and agentic functions.

What Sets Nemotron Super v1.5 Apart?

The model aims to:

- Deliver state-of-the-art accuracy in science, mathematics, coding, and agentic tasks.

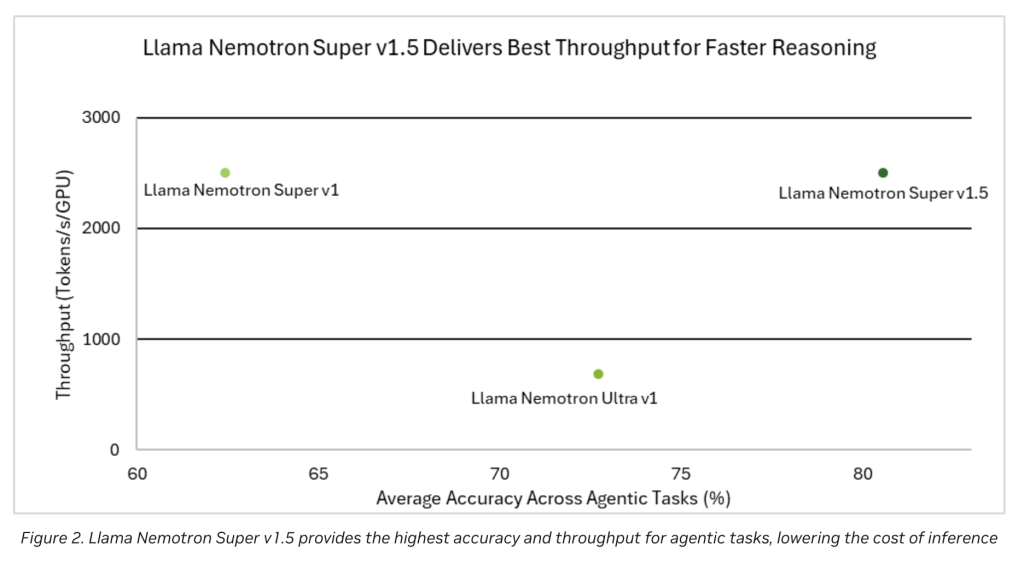

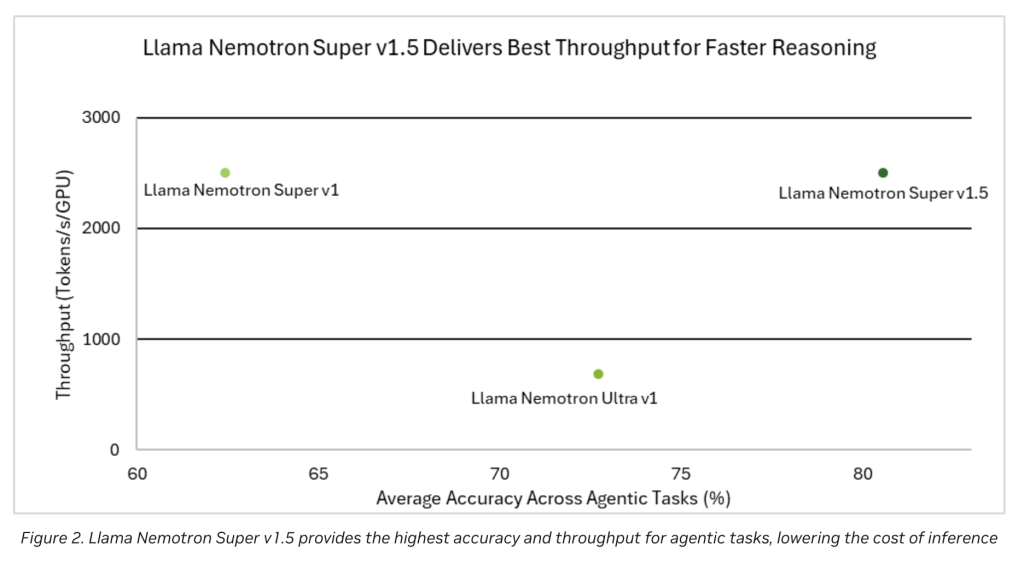

- Achieve up to 3x higher throughput than previous models, making deployment faster and more cost-effective.

- Run efficiently on a single GPU, making it practical for everything from individual developers to enterprise-scale applications.

Technical Innovations Behind the Model

1. Post-Training Refinement on High-Signal Data

Nemotron Super v1.5 builds on the foundation of efficient reasoning established by Llama Nemotron Ultra. The advances in Super v1.5 come from post-training refinement on a new proprietary dataset that focuses heavily on high-signal reasoning tasks. This targeted data amplifies the model's capabilities on complex, multi-step problems.

2. Neural Architecture Search and Pruning for Efficiency

A major innovation in v1.5 is the use of neural architecture search and advanced pruning techniques:

- By optimizing the network structure, NVIDIA increases throughput (inference speed) without sacrificing accuracy.

- The model now runs faster, enabling more complex reasoning per unit of compute while keeping inference costs low.

- The ability to deploy on a single GPU minimizes hardware overhead, making powerful AI accessible to smaller teams as well as large organizations.
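The release does not describe NVIDIA's pruning recipe in detail. Purely as a generic illustration of why pruning raises throughput (this is textbook structured pruning, not NVIDIA's method), the sketch below removes the output neurons of a toy fully connected layer whose weight rows have the smallest L2 norm. The result is a smaller dense matrix, so each input needs fewer multiply-accumulates. The layer values and the `keep_ratio` parameter are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]


def row_norm(row: List[float]) -> float:
    """L2 norm of one neuron's incoming weights."""
    return math.sqrt(sum(w * w for w in row))


def prune_rows(weights: Matrix, keep_ratio: float) -> Matrix:
    """Keep the top `keep_ratio` fraction of rows (neurons) ranked by L2 norm.

    Structured pruning removes whole rows, so the pruned layer stays a
    smaller dense matrix rather than a sparse one.
    """
    n_keep = max(1, round(len(weights) * keep_ratio))
    ranked = sorted(range(len(weights)), key=lambda i: row_norm(weights[i]), reverse=True)
    kept = sorted(ranked[:n_keep])  # preserve the original neuron order
    return [weights[i] for i in kept]


def macs(weights: Matrix) -> int:
    """Multiply-accumulates needed to apply the layer to one input vector."""
    return len(weights) * len(weights[0])


# Hypothetical 4-neuron layer with 3 inputs per neuron.
layer = [
    [0.9, -1.2, 0.4],    # strong neuron
    [0.01, 0.02, 0.0],   # near-dead neuron
    [1.5, 0.3, -0.7],    # strong neuron
    [0.05, -0.03, 0.1],  # weak neuron
]
pruned = prune_rows(layer, keep_ratio=0.5)
print(len(pruned), macs(layer), macs(pruned))
```

In a real network, removing an output neuron also removes the matching input column of the next layer, and accuracy is usually recovered with further training; the sketch omits both steps.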

3. Benchmarks and Performance

Across a wide range of public and internal benchmarks, Llama Nemotron Super v1.5 consistently leads its weight class, especially on tasks that require:

- Multi-step reasoning.

- Structured tool use.

- Instruction following, code synthesis, and agentic workflows.

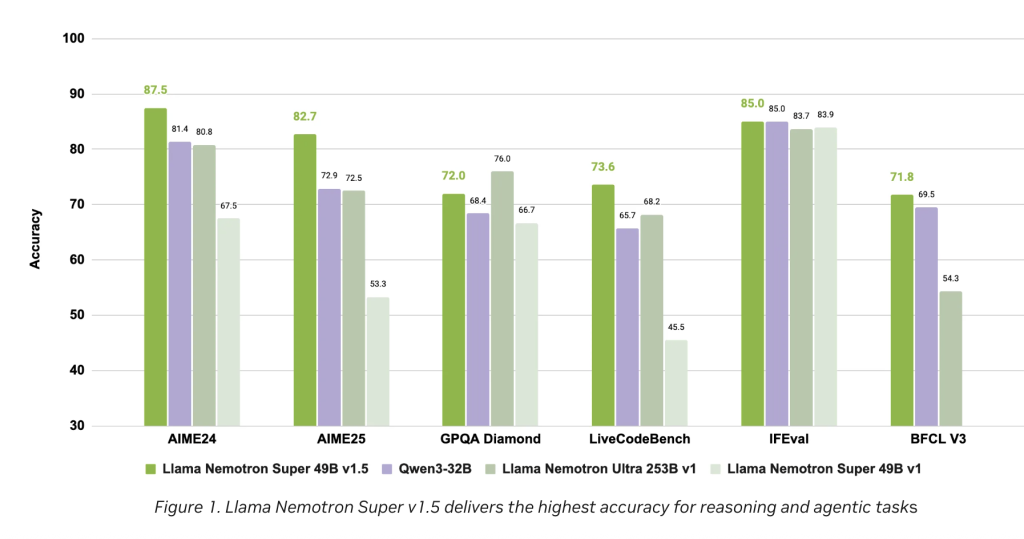

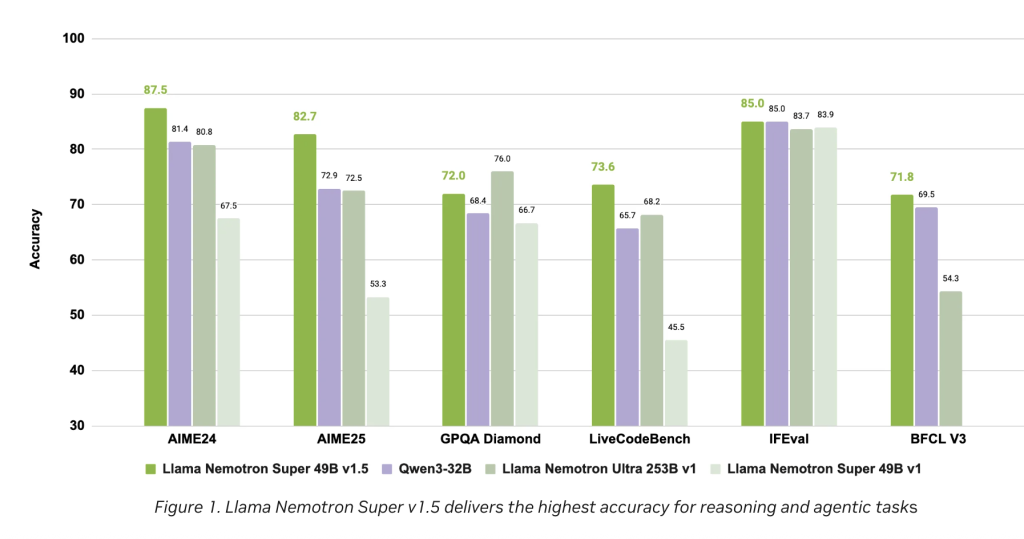

Performance charts (see Figures 1 and 2 in the release notes) show:

- The highest accuracy on core reasoning and agentic tasks compared with leading open models of similar size.

- Maximum throughput, which translates into faster processing and lower operating costs.

Key Features and Benefits

Leading Reasoning Accuracy

Refinement on high-signal datasets ensures that Llama Nemotron Super v1.5 excels at answering sophisticated scientific questions, solving complex mathematical problems, and generating reliable, maintainable code. This is crucial for real-world AI agents that must interact, reason, and act reliably within applications.

Throughput and Operational Efficiency

- 3x higher throughput: Optimizations allow the model to process more queries per second, making it suitable for real-time use cases and high-volume applications.

- Lower compute costs: An efficient architecture design and the ability to run on a single GPU remove scaling barriers for many organizations.

- Reduced deployment complexity: By minimizing hardware requirements while improving performance, deployment pipelines can be simplified across platforms.
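The link between throughput and cost is simple arithmetic: on a GPU billed by the hour, cost per token is the hourly price divided by the tokens processed per hour. The sketch below uses made-up inputs (a hypothetical $2.50/hour GPU and a hypothetical 1,000 tokens/s baseline); only the 3x ratio comes from the article, and it assumes the GPU is kept fully busy.

```python
def cost_per_million_tokens(gpu_price_per_hour: float, tokens_per_second: float) -> float:
    """Serving cost in dollars per one million tokens on a single, fully utilized GPU."""
    tokens_per_hour = tokens_per_second * 3600
    return gpu_price_per_hour / tokens_per_hour * 1_000_000


# Hypothetical numbers: same GPU price, baseline vs. 3x throughput.
baseline = cost_per_million_tokens(2.50, 1000)
improved = cost_per_million_tokens(2.50, 3 * 1000)
print(f"baseline ${baseline:.3f}/M tokens, 3x throughput ${improved:.3f}/M tokens")
```

Because cost per token is inversely proportional to throughput at a fixed hardware price, tripling throughput cuts the per-token cost to one third, regardless of the specific price used.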

Built for Agentic Applications

Llama Nemotron Super v1.5 is not only about answering questions; it is also built for agentic tasks, where an AI model must be proactive, follow instructions, call functions, and integrate with tools and workflows. This adaptability makes the model well suited for:

- Conversational agents.

- Autonomous coding assistants.

- Scientific and research AI tools.

- Intelligent automation agents deployed in enterprise workflows.
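In agentic workflows, "calling functions" usually means the model emits a structured request that the host application parses, executes, and feeds back into the conversation. The article does not specify Nemotron Super v1.5's tool-call format, so the sketch below assumes a generic JSON shape (`{"name": ..., "arguments": {...}}`) and uses a hard-coded string in place of real model output. The tools `get_ticket_status` and `add`, and the `dispatch` helper, are hypothetical.

```python
import json
from typing import Any, Callable, Dict


# Hypothetical tools an agent might expose; names and behavior are illustrative only.
def get_ticket_status(ticket_id: str) -> str:
    statuses = {"T-100": "open", "T-101": "resolved"}
    return statuses.get(ticket_id, "unknown")


def add(a: float, b: float) -> float:
    return a + b


TOOLS: Dict[str, Callable[..., Any]] = {
    "get_ticket_status": get_ticket_status,
    "add": add,
}


def dispatch(tool_call_json: str) -> Dict[str, Any]:
    """Parse an assumed JSON tool call, run the matching tool, and return a
    result message the host would append to the conversation."""
    try:
        call = json.loads(tool_call_json)
        fn = TOOLS[call["name"]]
        result = fn(**call.get("arguments", {}))
        return {"role": "tool", "name": call["name"], "content": json.dumps(result)}
    except (json.JSONDecodeError, KeyError, TypeError) as err:
        # Report malformed or unknown calls back to the model instead of crashing.
        return {"role": "tool", "name": None, "content": json.dumps({"error": str(err)})}


# Stand-in for text the model might generate when it decides to use a tool.
model_output = '{"name": "get_ticket_status", "arguments": {"ticket_id": "T-101"}}'
print(dispatch(model_output))
```

Returning errors as tool messages, rather than raising, lets the model see what went wrong and retry with a corrected call, which is a common pattern in agent loops.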

Practical Deployment

The model is available now for hands-on experimentation and integration:

- Interactive access: Available directly on NVIDIA Build (build.nvidia.com), allowing users and developers to test its capabilities in real-world scenarios.

- Open model download: Available on Hugging Face, ready for deployment on custom infrastructure or integration into broader AI pipelines.

How Nemotron Super v1.5 Moves the Ecosystem Forward

Open Weights and Community Impact

Continuing NVIDIA's philosophy, Nemotron Super v1.5 is released as an open model. This transparency fosters:

- Fast community-driven benchmarking and feedback.

- Easier customization for specialized domains.

- Greater collective scrutiny and iteration, helping trustworthy and robust AI models emerge.

Enterprise and Research Readiness

Super v1.5 is tailored to serve as the backbone of next-generation AI agents in:

- Enterprise knowledge management.

- Customer support automation.

- Advanced research and scientific computing.

Alignment with AI Best Practices

By combining NVIDIA's high-quality synthetic datasets with state-of-the-art model refinement techniques, Nemotron Super v1.5 adheres to standards for:

- Transparency in training data and methods.

- Strict quality assurance of model output.

- Responsible and explainable AI.

Conclusion: A New Era for AI Reasoning Models

Llama Nemotron Super v1.5 is a significant step forward in the open-source AI landscape, delivering top-tier reasoning capabilities, transformative efficiency, and broad applicability. For developers aiming to build reliable AI agents, whether for individual projects or complex enterprise solutions, this release marks a milestone that sets new standards for accuracy and throughput.

With NVIDIA's ongoing commitment to openness, efficiency, and community collaboration, Llama Nemotron Super v1.5 is poised to accelerate the development of smarter, more capable AI agents designed for the diverse challenges of tomorrow.

Check out the open-source weights and technical details. All credit for this research goes to the researchers of this project.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of Marktechpost, an artificial intelligence media platform that stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform has over 2 million monthly views, demonstrating its popularity among its audience.