Robobrain 2.0: A Next-Generation Vision-Language Model Unifying Embodied AI for Advanced Robotics

Advances in artificial intelligence are rapidly closing the gap between digital reasoning and real-world interaction. At the forefront of this progress is embodied AI, a field focused on enabling robots to perceive, reason, and act effectively in physical environments. As industries seek to automate complex spatial and temporal tasks, from household assistance to logistics, AI systems that truly understand their surroundings and plan their actions accordingly become crucial.

Introducing Robobrain 2.0: A Breakthrough in Embodied Vision-Language AI

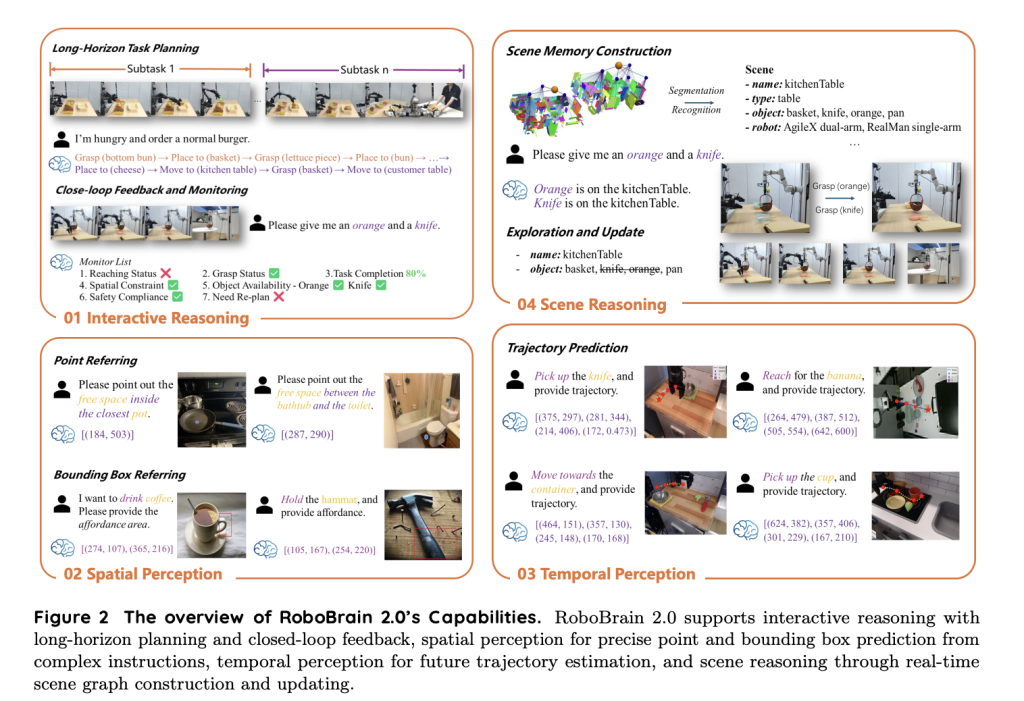

Developed by the Beijing Academy of Artificial Intelligence (BAAI), Robobrain 2.0 marks a major milestone in the design of foundation models for robotics and embodied AI. Unlike traditional AI models, Robobrain 2.0 unifies spatial perception, advanced reasoning, and long-horizon planning within a single architecture. Its versatility supports a wide range of embodied tasks, such as affordance prediction, spatial object localization, trajectory planning, and multi-agent collaboration.

Key Highlights of Robobrain 2.0

- Two scalable versions: A fast, resource-efficient 7-billion-parameter (7B) variant and a powerful 32-billion-parameter (32B) model for more demanding tasks.

- Unified multimodal architecture: Couples a high-resolution vision encoder with a decoder-only language model, enabling seamless integration of images, videos, text descriptions, and scene graphs.

- Advanced spatial and temporal reasoning: Excels at tasks that require understanding object relationships, predicting motion, and performing complex multi-step planning.

- Open-source foundation: Robobrain 2.0 is built on the FlagScale framework and is designed for research accessibility, reproducibility, and practical deployment.
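To put the two model sizes in perspective, the back-of-the-envelope arithmetic for weight memory is sketched here. It assumes 16-bit (bf16) weights at 2 bytes per parameter and counts nothing else; `weight_memory_gb` is a hypothetical helper, and real deployments also need memory for activations, the KV cache, and runtime overhead.

```python
# Back-of-the-envelope weight memory for the two model sizes.
# Assumptions: 1 GB = 1e9 bytes; only the weights are counted, so activations,
# KV cache, optimizer state, and framework overhead come on top of these numbers.

BYTES_PER_PARAM = {"fp32": 4, "bf16": 2, "fp16": 2}


def weight_memory_gb(num_params: float, dtype: str = "bf16") -> float:
    """Approximate size of the model weights in gigabytes."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    for name, params in (("7B", 7e9), ("32B", 32e9)):
        print(f"{name}: ~{weight_memory_gb(params):.0f} GB in bf16, "
              f"~{weight_memory_gb(params, 'fp32'):.0f} GB in fp32")
```

Running it prints about 14 GB (bf16) and 28 GB (fp32) for the 7B variant, and 64 GB and 128 GB for the 32B model, which helps explain why the smaller variant is the more practical choice on resource-constrained hardware.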

How Robobrain 2.0 works: Architecture and Training

Multimodal input pipeline

Robobrain 2.0 ingests various combinations of sensory and symbolic data:

- Multi-view images and videos: Supports high-resolution, egocentric, and third-person visual streams for rich spatial context.

- Natural-language instructions: Interprets a wide range of commands, from simple navigation to complex manipulation instructions.

- Scene graphs: Processes structured representations of objects, their relationships, and environment layouts.
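The source does not say how scene graphs are encoded, so what follows is only a minimal sketch under an assumed schema: objects with 3D positions plus (subject, predicate, object) relation triples, flattened into one line of text per fact so a tokenizer could consume it. `SceneGraph`, `add_object`, `relate`, and `to_text` are hypothetical names, not part of any Robobrain 2.0 API.

```python
from dataclasses import dataclass, field

# Hypothetical scene-graph schema and text serialization (illustrative only,
# not the model's actual input format).


@dataclass
class SceneObject:
    name: str
    position: tuple[float, float, float]  # (x, y, z) in an assumed world frame


@dataclass
class SceneGraph:
    objects: dict[str, SceneObject] = field(default_factory=dict)
    relations: list[tuple[str, str, str]] = field(default_factory=list)  # (subject, predicate, object)

    def add_object(self, obj_id: str, name: str, position: tuple[float, float, float]) -> None:
        self.objects[obj_id] = SceneObject(name, position)

    def relate(self, subj: str, predicate: str, obj: str) -> None:
        # Relations may only connect objects that already exist in the graph.
        if subj not in self.objects or obj not in self.objects:
            raise KeyError("both endpoints must be existing objects")
        self.relations.append((subj, predicate, obj))

    def to_text(self) -> str:
        """Flatten the graph into one line per object and one line per relation."""
        lines = [f"{oid}: {o.name} at ({o.position[0]:.2f}, {o.position[1]:.2f}, {o.position[2]:.2f})"
                 for oid, o in self.objects.items()]
        lines += [f"{s} {p} {o}" for s, p, o in self.relations]
        return "\n".join(lines)
```

For example, adding a cup at (0.4, 0.1, 0.8) and a table at (0.5, 0.0, 0.75), then calling `relate("cup_1", "on", "table_1")`, serializes to two object lines followed by `cup_1 on table_1`.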

The system tokenizes language and scene graphs, while a dedicated vision encoder uses adaptive positional encoding and windowed attention to process visual data efficiently. Visual features are then projected into the language model's embedding space through a multi-layer perceptron (MLP), producing a unified multimodal token sequence.
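The MLP projection step can be sketched in plain Python. This assumes a two-layer MLP with a GELU activation, toy dimensions, randomly initialized weights, and a layout that simply places all visual tokens before the text tokens; Robobrain 2.0's actual encoder, hidden sizes, and token interleaving are not given here, and `MLPProjector` and `build_sequence` are illustrative names.

```python
import math
import random

# Sketch of projecting vision-encoder features into the language model's embedding space.
# Assumptions (not from the source): 2-layer MLP, GELU, toy sizes, random weights,
# and visual tokens simply prepended to the text tokens.


def gelu(x: float) -> float:
    # tanh approximation of the GELU activation
    return 0.5 * x * (1.0 + math.tanh(math.sqrt(2.0 / math.pi) * (x + 0.044715 * x ** 3)))


def linear(vec: list[float], weight: list[list[float]], bias: list[float]) -> list[float]:
    # weight is stored as [out_dim][in_dim]; returns weight @ vec + bias
    return [sum(w * v for w, v in zip(row, vec)) + b for row, b in zip(weight, bias)]


def init_layer(in_dim: int, out_dim: int, rng: random.Random):
    # small random weights, zero bias
    weight = [[rng.gauss(0.0, 0.02) for _ in range(in_dim)] for _ in range(out_dim)]
    return weight, [0.0] * out_dim


class MLPProjector:
    """Maps one visual feature of size vis_dim to one token embedding of size lm_dim."""

    def __init__(self, vis_dim: int, lm_dim: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.w1, self.b1 = init_layer(vis_dim, lm_dim, rng)
        self.w2, self.b2 = init_layer(lm_dim, lm_dim, rng)

    def __call__(self, feature: list[float]) -> list[float]:
        hidden = [gelu(h) for h in linear(feature, self.w1, self.b1)]
        return linear(hidden, self.w2, self.b2)


def build_sequence(text_embeddings, visual_features, projector):
    """Return one token sequence: projected visual tokens followed by text embeddings."""
    visual_tokens = [projector(f) for f in visual_features]
    return visual_tokens + text_embeddings


if __name__ == "__main__":
    projector = MLPProjector(vis_dim=8, lm_dim=4)
    patches = [[0.1 * i for i in range(8)] for _ in range(3)]  # 3 visual features
    text = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0]]         # 2 text embeddings
    seq = build_sequence(text, patches, projector)
    print(len(seq), len(seq[0]))  # 5 tokens, each of size 4
```

The key point is that after projection, visual and text tokens share one dimensionality and can be processed as a single sequence. Vision-language models often splice projected tokens in at image placeholder positions rather than prepending them; prepending just keeps the sketch short.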

Three-stage training process

Robobrain 2.0 builds its capabilities through a progressive three-stage training curriculum:

- Foundational spatiotemporal learning: Establishes core visual and linguistic abilities, basic spatial perception, and basic temporal understanding.

- Embodied task enhancement: Refines the model with real-world, multi-view video and high-resolution datasets, optimizing it for tasks such as 3D affordance detection and robot-centric scene analysis.

- Deliberate reasoning: Integrates interpretable step-by-step reasoning using diverse activity traces and task decompositions, supporting robust decision-making in long-horizon, multi-agent scenarios.
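One way to picture this progressive curriculum is as an ordered list of stages, each initialized from the previous stage's checkpoint. The stage names paraphrase the list above; the data descriptions, the `Stage` dataclass, and `run_curriculum` are hypothetical, and the training function is passed in as a stub rather than standing for the published recipe.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical encoding of the three-stage curriculum; stage names paraphrase the
# article, and the data/goal strings are descriptive placeholders, not real datasets.


@dataclass(frozen=True)
class Stage:
    name: str
    data: tuple[str, ...]
    goal: str


CURRICULUM = (
    Stage("foundational_spatiotemporal", ("image-text", "video-text"),
          "core vision-language skills, basic spatial and temporal understanding"),
    Stage("embodied_task_enhancement", ("multi-view video", "high-resolution scenes"),
          "3D affordance detection, robot-centric scene analysis"),
    Stage("deliberate_reasoning", ("activity traces", "task decompositions"),
          "step-by-step reasoning for long-horizon, multi-agent tasks"),
)


def run_curriculum(stages, train_stage: Callable[..., str]) -> list[str]:
    """Train stages in order, initializing each one from the previous stage's checkpoint."""
    checkpoint = "base"  # hypothetical identifier for the starting weights
    history = []
    for stage in stages:
        checkpoint = train_stage(stage, init_from=checkpoint)
        history.append(checkpoint)
    return history
```

Passing a stub such as `lambda stage, init_from: f"ckpt:{stage.name}"` returns one checkpoint label per stage, in order; the design choice being illustrated is simply that later stages build on, rather than replace, what earlier stages learned.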

Scalable research and deployment infrastructure

Robobrain 2.0 is built on the FlagScale platform, which provides:

- Hybrid parallelism for efficient use of computing resources

- Pre-allocated memory and high-throughput data pipelines to reduce training cost and latency

- Automatic fault tolerance to keep large-scale distributed training stable
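Automatic fault tolerance in large distributed training jobs generally relies on periodic checkpoints plus automatic restart. The sketch illustrates that general technique on a single process; it is not FlagScale's API, and `TransientWorkerError`, `train_with_restarts`, and the in-memory checkpoint are hypothetical stand-ins for real worker failures and durable storage.

```python
# Sketch of checkpoint-and-restart fault tolerance (generic technique, not FlagScale's API).
# The exception type and in-memory checkpoint are hypothetical stand-ins for real
# node failures and durable checkpoint storage.


class TransientWorkerError(RuntimeError):
    """Represents a recoverable failure, e.g. a worker that dropped out mid-step."""


def train_with_restarts(total_steps, step_fn, checkpoint_every=10, max_restarts=3):
    """Run step_fn for total_steps steps, rolling back to the last checkpoint on failure."""
    checkpoint = {"step": 0, "state": 0.0}  # last saved snapshot
    step, state = checkpoint["step"], checkpoint["state"]
    restarts = 0
    while step < total_steps:
        try:
            state = step_fn(step, state)
            step += 1
            if step % checkpoint_every == 0:
                checkpoint = {"step": step, "state": state}  # periodic save
        except TransientWorkerError:
            restarts += 1
            if restarts > max_restarts:
                raise  # give up and surface the error after too many restarts
            step, state = checkpoint["step"], checkpoint["state"]  # roll back and resume
    return state, restarts


if __name__ == "__main__":
    failed_once = set()

    def flaky_step(step, state):
        # fail exactly once at step 25 to simulate a node dropping out
        if step == 25 and step not in failed_once:
            failed_once.add(step)
            raise TransientWorkerError("simulated node failure")
        return state + 1.0

    print(train_with_restarts(50, flaky_step))  # (50.0, 1)
```

The run loses only the five steps since the checkpoint at step 20 instead of restarting from scratch; the checkpoint interval trades that lost work against the cost of saving state.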

This infrastructure allows for rapid training, simple experimentation and scalable deployment in real-world robotic applications.

Real-world applications and performance

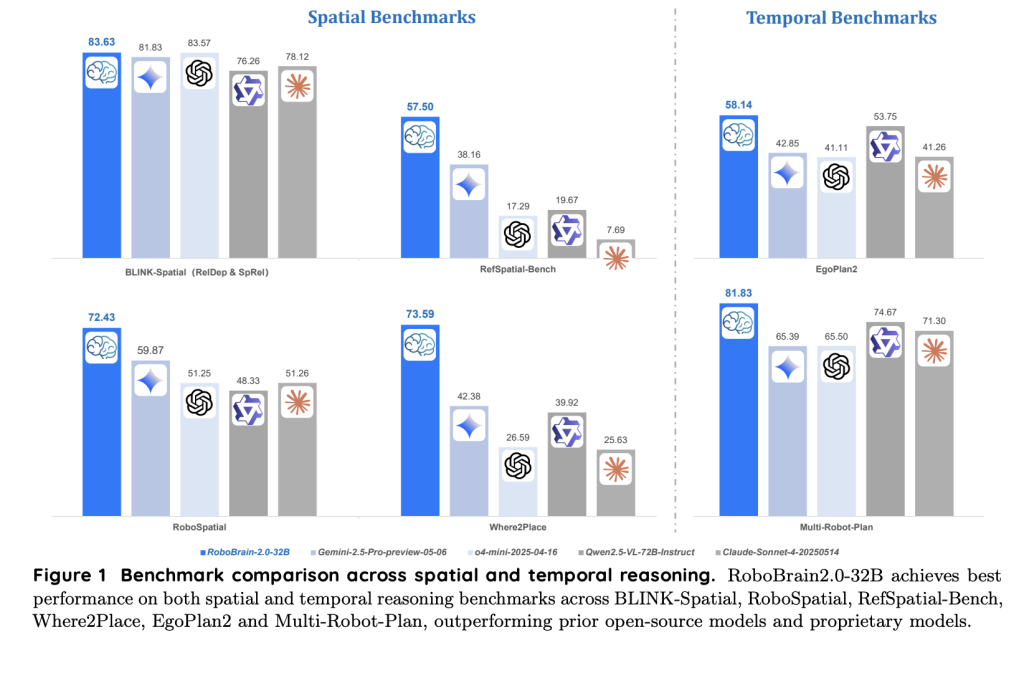

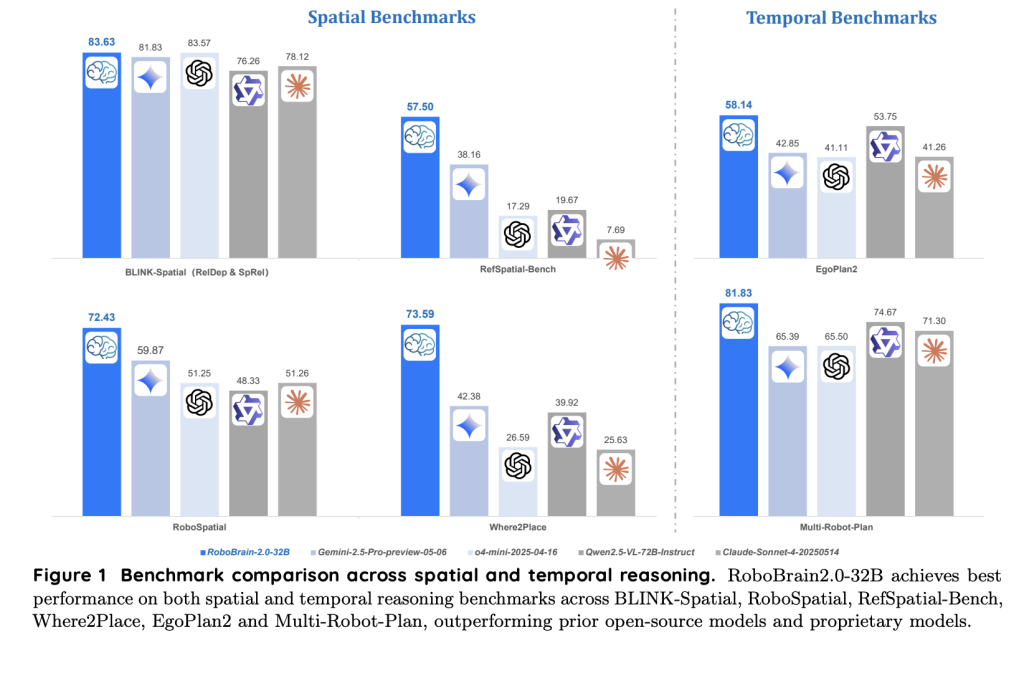

Robobrain 2.0 has been evaluated on a range of embodied AI benchmark suites, consistently outperforming both open-source and proprietary models in spatial and temporal reasoning. Key capabilities include:

- Affordance prediction: Identifies functional object regions for grasping, pushing, or other interactions

- Precise object localization and pointing: Accurately follows text instructions to locate and point to objects or free space in complex scenes

- Trajectory forecasting: Plans efficient, obstacle-aware end-effector movements

- Multi-agent planning: Decomposes tasks and coordinates multiple robots toward collaborative goals
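As a rough illustration of what "obstacle-aware" means for a planned trajectory, the sketch checks a 2D path, given as (x, y) waypoints, against axis-aligned obstacle boxes using Liang-Barsky segment clipping, then picks the shortest collision-free candidate. This is a generic post-processing check, not Robobrain 2.0's planning method; the waypoint format and all function names are assumptions.

```python
import math

# Generic obstacle check for a 2D trajectory (not Robobrain 2.0's planner).
# Assumptions: waypoints are (x, y) points; obstacles are axis-aligned boxes.

Point = tuple[float, float]
Box = tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def segment_intersects_box(p: Point, q: Point, box: Box) -> bool:
    """Liang-Barsky clipping: True if segment p->q touches or crosses the box."""
    x_min, y_min, x_max, y_max = box
    dx, dy = q[0] - p[0], q[1] - p[1]
    t_enter, t_exit = 0.0, 1.0
    for denom, num in ((-dx, p[0] - x_min), (dx, x_max - p[0]),
                       (-dy, p[1] - y_min), (dy, y_max - p[1])):
        if denom == 0:
            if num < 0:
                return False  # parallel to this boundary and fully outside it
            continue
        t = num / denom
        if denom < 0:
            t_enter = max(t_enter, t)  # entering through this boundary
        else:
            t_exit = min(t_exit, t)    # leaving through this boundary
        if t_enter > t_exit:
            return False
    return True


def trajectory_is_clear(waypoints: list[Point], obstacles: list[Box]) -> bool:
    """True if no segment between consecutive waypoints hits any obstacle."""
    return not any(segment_intersects_box(p, q, box)
                   for p, q in zip(waypoints, waypoints[1:])
                   for box in obstacles)


def path_length(waypoints: list[Point]) -> float:
    return sum(math.dist(p, q) for p, q in zip(waypoints, waypoints[1:]))


def choose_trajectory(candidates, obstacles):
    """Return the shortest collision-free candidate, or None if every candidate collides."""
    clear = [t for t in candidates if trajectory_is_clear(t, obstacles)]
    return min(clear, key=path_length) if clear else None
```

For example, with an obstacle box at (4, -1, 6, 1), the straight path [(0, 0), (10, 0)] is rejected and the detour [(0, 0), (5, 3), (10, 0)] is returned.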

Its robust, open-access design makes Robobrain 2.0 immediately applicable to household robotics, industrial automation, logistics, and beyond.

Embodying the potential of AI and robotics

Through unified vision-language understanding, interactive reasoning, and robust planning, Robobrain 2.0 sets a new standard for embodied AI. Its modular, scalable architecture and open-source training recipes foster innovation across the robotics and AI research communities. Whether you are a developer building embodied intelligent assistants, a researcher advancing AI planning, or an engineer automating real-world tasks, Robobrain 2.0 provides a strong foundation for tackling the most complex spatial and temporal challenges.

Check out the Paper and Code. All credit for this research goes to the researchers of this project.

Nikhil is an intern consultant at Marktechpost. He is pursuing an integrated dual degree in Materials at the Indian Institute of Technology, Kharagpur. Nikhil is an AI/ML enthusiast who is always researching applications in fields such as biomaterials and biomedical science. With a strong background in materials science, he is exploring new advancements and creating opportunities to contribute.